How Data Normalization Works

In financial markets, data comes from many sources: exchanges, alternative trading systems, news providers, and market participants. Each of these sources uses different formats, field names, and conventions, creating challenges for traders and systems that need consistent, reliable information.

Data normalization is the process of standardizing and structuring raw data so it can be easily used by trading systems, analytics tools, and risk platforms. The result is information that market participants can interpret, compare, and act on.

Normalization is not about making trading decisions; it is about making data accurate, consistent, and usable.

What Data Normalization Is

Data normalization transforms incoming market data into a uniform format. This allows different systems to consume, process, and display information consistently.

Normalization addresses issues such as:

- Inconsistent field names: For example, “LastPrice” vs. “PX_LAST” across vendors

- Varied message structures: Different feeds may organize order book or trade data differently

- Different trading symbol formats: Berkshire Hathaway Class A shares may appear as "BRK.A", "BRKA" (without the dot), or "BRK/A" (with a slash). Apple Inc. is commonly shown as "AAPL" on retail platforms, while Reuters formats it as "AAPL.O" (the ".O" suffix indicates a NASDAQ listing). The S&P 500 Index is quoted as "^GSPC" on Yahoo Finance (a caret prefix marks indices), as ".SPX" on Reuters (a dot prefix), and simply as "SPX" on some terminals.

- Time and date formats: Ensuring timestamps are comparable across sources, for example when one source writes dates as day/month/year and another as month/day/year

- Currency and unit conversions: Standardizing prices and volumes
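Symbol differences like the ones listed above are usually resolved with an explicit lookup rather than string rules, because the same character means different things in different places: the dot in "AAPL.O" marks a venue, while the dot in "BRK.A" is part of a share class. The sketch below assumes a hypothetical mapping table, hypothetical source names (`vendor_a`, `reuters`, `yahoo`, and so on), and an arbitrarily chosen canonical form; it is an illustration, not any vendor's actual symbology service.

```python
# Hypothetical lookup table: (source, source-specific symbol) -> canonical internal symbol.
# Source names and the canonical forms chosen here are assumptions for illustration.
SYMBOL_MAP: dict[tuple[str, str], str] = {
    ("vendor_a", "BRK.A"): "BRK.A",
    ("vendor_b", "BRKA"): "BRK.A",
    ("vendor_c", "BRK/A"): "BRK.A",
    ("retail", "AAPL"): "AAPL",
    ("reuters", "AAPL.O"): "AAPL",
    ("reuters", ".SPX"): "SPX",
    ("yahoo", "^GSPC"): "SPX",
    ("terminal", "SPX"): "SPX",
}


def normalize_symbol(source: str, raw_symbol: str) -> str:
    """Map a source-specific symbol to the internal canonical symbol."""
    try:
        return SYMBOL_MAP[(source, raw_symbol)]
    except KeyError:
        # Unknown symbols are surfaced instead of guessed, so a bad mapping is visible.
        raise KeyError(f"no mapping for {raw_symbol!r} from {source!r}") from None


print(normalize_symbol("reuters", "AAPL.O"))  # AAPL
print(normalize_symbol("vendor_c", "BRK/A"))  # BRK.A
```

Keying the table on the source as well as the symbol keeps two vendors' conventions from colliding when they happen to reuse the same string.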

Without normalization, downstream systems would have to handle inconsistencies individually, increasing complexity, risk, and processing time.
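The date-format issue from the list above shows why these inconsistencies cannot simply be left to each consumer: the string "03/04/2025" is a valid date under both conventions but means two different days. A minimal sketch, assuming each source's convention is known in advance (the source names `source_dmy` and `source_mdy` are hypothetical), converts everything to ISO 8601 using Python's standard `datetime.strptime`:

```python
from datetime import datetime

# Hypothetical per-source date conventions; in practice these would come from each feed's specification.
DATE_FORMATS: dict[str, str] = {
    "source_dmy": "%d/%m/%Y",  # day, month, year
    "source_mdy": "%m/%d/%Y",  # month, day, year
}


def normalize_date(source: str, raw: str) -> str:
    """Parse a source-specific date string and return it in ISO 8601 form (YYYY-MM-DD)."""
    return datetime.strptime(raw, DATE_FORMATS[source]).date().isoformat()


print(normalize_date("source_dmy", "03/04/2025"))  # 2025-04-03
print(normalize_date("source_mdy", "03/04/2025"))  # 2025-03-04
```

The format is looked up per source rather than inferred from the string, since an ambiguous value like this one gives no clue which convention produced it.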

Why Normalization Matters

For traders and trading systems, normalized data provides several advantages:

- Clarity: Allows everyone to interpret the same information in the same way

- Efficiency: Systems can process data faster without custom parsing for each source

- Comparability: Multiple sources can be aggregated for a comprehensive market view

- Reliability: Reduced risk of errors due to variations in fields, units, or timestamps

Normalization is particularly important in high-speed trading, where even small discrepancies can affect analytics, monitoring, and automated responses.

How Data Normalization Works

The process typically involves several stages:

1. Data Ingestion

Market data is first ingested from multiple sources. These sources may include:

- Exchange feeds (both direct and consolidated)

- Broker or vendor feeds

- Reference data providers

- News and research services

Each source has its own structure, protocols, and messaging formats.
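One common way to handle this variety at the ingestion stage is to avoid interpreting payloads yet and simply wrap each one with metadata that later stages will need. The sketch below assumes a hypothetical `RawMessage` envelope recording the source name, the untouched payload, a local receive time, and a local arrival counter; real systems differ in what they capture and how.

```python
import time
from dataclasses import dataclass
from itertools import count


@dataclass(frozen=True)
class RawMessage:
    source: str      # which feed the message came from (hypothetical names below)
    payload: bytes   # message exactly as received; nothing is parsed yet
    recv_ns: int     # local receive time, in nanoseconds since the epoch
    ingest_seq: int  # local arrival order across all sources


_arrival_counter = count(1)


def ingest(source: str, payload: bytes) -> RawMessage:
    """Wrap a raw payload with source and receive-time metadata without interpreting it."""
    return RawMessage(source, payload, time.time_ns(), next(_arrival_counter))


msg = ingest("exchange_direct", b"T|42|PX_LAST=189.25|SIZE=100")
print(msg.source, msg.ingest_seq)  # exchange_direct 1
```

Keeping the original bytes alongside the metadata makes it possible to re-parse or audit a message later if a parsing rule turns out to be wrong.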

2. Parsing

Incoming messages are parsed to extract relevant fields. Parsing converts data from raw messages into structured records.

Key considerations include:

- Handling multiple message types (trades, quotes, order books)

- Recognizing source-specific formats

- Maintaining sequence and timing information for each message
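The parsing considerations above can be sketched with two invented message formats: a pipe-delimited feed (`feed_a`) and a JSON feed (`feed_b`). Both formats, the field names, and the `ParsedMessage` record are hypothetical. Each parser recognizes its own format, translates the message type, and keeps the source sequence number, but leaves field names and value types alone, since those belong to the standardization stage.

```python
import json
from dataclasses import dataclass
from typing import Any, Callable


@dataclass
class ParsedMessage:
    source: str
    msg_type: str           # "trade" or "quote"
    seq: int                # source sequence number, kept for later gap/duplicate checks
    fields: dict[str, Any]  # remaining fields, still under the source's own names


def parse_pipe(source: str, line: str) -> ParsedMessage:
    # Hypothetical format: TYPE|SEQ|name=value|name=value...
    type_code, seq, *pairs = line.strip().split("|")
    msg_type = {"T": "trade", "Q": "quote"}[type_code]
    fields = dict(pair.split("=", 1) for pair in pairs)
    return ParsedMessage(source, msg_type, int(seq), fields)


def parse_json(source: str, text: str) -> ParsedMessage:
    # Hypothetical format: {"type": ..., "seq": ..., <other fields>}
    obj = json.loads(text)
    msg_type = obj.pop("type")
    seq = obj.pop("seq")
    return ParsedMessage(source, msg_type, int(seq), obj)


# Dispatch on the source, since each source has its own format.
PARSERS: dict[str, Callable[[str, str], ParsedMessage]] = {"feed_a": parse_pipe, "feed_b": parse_json}


def parse(source: str, raw: str) -> ParsedMessage:
    return PARSERS[source](source, raw)


trade = parse("feed_a", "T|42|PX_LAST=189.25|SIZE=100")
print(trade.msg_type, trade.seq, trade.fields)  # trade 42 {'PX_LAST': '189.25', 'SIZE': '100'}
quote = parse("feed_b", '{"type": "quote", "seq": 7, "bidPrice": 189.2, "askPrice": 189.3}')
print(quote.msg_type, quote.seq, quote.fields)  # quote 7 {'bidPrice': 189.2, 'askPrice': 189.3}
```

Note that the pipe-delimited values are still strings after parsing; converting them to numbers is deliberately left to the next stage.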

3. Standardization

Once parsed, data fields are mapped to a standard internal schema. This involves:

- Aligning field names (e.g., mapping both “bidPrice” and “BidPx” to a single internal name)

- Normalizing data types (numbers, timestamps, strings)

- Ensuring consistent units (e.g., shares, contracts, currency)

Standardization is crucial for downstream systems to process data consistently and without additional conversion logic.
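The three standardization tasks above (field names, data types, units) can be combined in one mapping step. In this sketch the field mappings are hypothetical, and so are the unit conventions: `feed_b` is assumed to send prices in integer cents and sizes in round lots of 100 shares. Prices are converted to Python's `Decimal` to avoid binary floating-point rounding.

```python
from decimal import Decimal

# Hypothetical per-source mappings: source field name -> internal field name.
FIELD_MAP: dict[str, dict[str, str]] = {
    "feed_a": {"BidPx": "bid_price", "AskPx": "ask_price", "BidSz": "bid_size", "AskSz": "ask_size"},
    "feed_b": {"bidPrice": "bid_price", "askPrice": "ask_price", "bidSize": "bid_size", "askSize": "ask_size"},
}

# Hypothetical unit conventions: feed_b reports prices in cents and sizes in round lots of 100 shares.
PRICE_SCALE: dict[str, Decimal] = {"feed_a": Decimal(1), "feed_b": Decimal("0.01")}
SIZE_MULTIPLIER: dict[str, int] = {"feed_a": 1, "feed_b": 100}


def standardize(source: str, fields: dict[str, object]) -> dict[str, object]:
    """Rename fields to the internal schema and convert values to common types and units."""
    out: dict[str, object] = {}
    for src_name, value in fields.items():
        name = FIELD_MAP[source].get(src_name)
        if name is None:
            continue  # fields outside the internal schema are ignored in this sketch
        if name.endswith("_price"):
            out[name] = Decimal(str(value)) * PRICE_SCALE[source]  # dollars, as Decimal
        else:
            out[name] = int(value) * SIZE_MULTIPLIER[source]  # shares, as int
    return out


a = standardize("feed_a", {"BidPx": "189.25", "AskPx": "189.30", "BidSz": "300", "AskSz": "200"})
b = standardize("feed_b", {"bidPrice": 18925, "askPrice": 18930, "bidSize": 3, "askSize": 2})
print(a == b)  # True
```

After this step, the same quote from either feed produces an identical record, which is what lets downstream systems skip source-specific conversion logic.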

4. Validation and Cleaning

Normalized data is validated to detect errors, missing fields, or outliers. This stage may include:

- Checking for duplicate or missing messages

- Confirming timestamp consistency

- Flagging anomalous price or volume values

Validation and cleaning help ensure that the information moving forward to trading, monitoring, and reporting systems is reliable and complete.
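The three checks listed above can be sketched as a small per-source validator. The thresholds and flag names are assumptions chosen for illustration, not recommended values. The validator flags problems rather than silently discarding messages, leaving the decision to the caller; the one exception is a sequence number that has already been seen, which is reported as a likely duplicate.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Validator:
    """Per-source checks; the 10% price threshold is an illustrative assumption."""
    max_move: float = 0.10
    expected_seq: Optional[int] = None
    last_ts: Optional[int] = None
    last_price: Optional[float] = None

    def check(self, seq: int, ts_ns: int, price: float) -> list[str]:
        flags: list[str] = []
        # Duplicate or missing messages, detected from the source sequence number.
        if self.expected_seq is not None:
            if seq < self.expected_seq:
                return ["duplicate_or_stale"]
            if seq > self.expected_seq:
                flags.append(f"gap:{self.expected_seq}-{seq - 1}")
        self.expected_seq = seq + 1
        # Timestamp consistency: timestamps from one source should not go backwards.
        if self.last_ts is not None and ts_ns < self.last_ts:
            flags.append("timestamp_out_of_order")
        else:
            self.last_ts = ts_ns
        # Anomalous prices: a large jump from the last accepted price is flagged, not used as the new baseline.
        if self.last_price and abs(price - self.last_price) / self.last_price > self.max_move:
            flags.append("price_outlier")
        else:
            self.last_price = price
        return flags


v = Validator()
print(v.check(1, 1_000, 100.0))  # []
print(v.check(2, 2_000, 101.0))  # []
print(v.check(2, 2_100, 101.0))  # ['duplicate_or_stale']
print(v.check(5, 3_000, 150.0))  # ['gap:3-4', 'price_outlier']
print(v.check(6, 2_500, 102.0))  # ['timestamp_out_of_order']
```

A simple fixed threshold like this would keep flagging prices after a genuine, sustained market move, so production checks are typically more adaptive; the sketch only shows where each check fits.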

5. Enrichment (Optional)

Strictly speaking, normalization focuses on standardizing raw data, but some systems also enrich the data with additional context:

- Calculated metrics (e.g., mid-price, spread)

- Reference data (e.g., security identifiers, exchange codes)

- Timestamps aligned across multiple sources

Enrichment complements normalization but remains distinct: normalization ensures consistency, while enrichment adds contextual value for analysis.
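To make the distinction concrete, the sketch below enriches a quote that is assumed to be normalized already. It adds two calculated metrics (mid-price and spread) and a reference field taken from a hypothetical reference table. It returns a copy, so the normalized fields themselves are never changed.

```python
from decimal import Decimal

# Hypothetical reference data keyed by canonical symbol.
REFERENCE: dict[str, dict[str, str]] = {"AAPL": {"listing_exchange": "NASDAQ"}}


def enrich(quote: dict) -> dict:
    """Return a copy of a normalized quote with derived metrics and reference fields added."""
    bid, ask = quote["bid_price"], quote["ask_price"]
    enriched = dict(quote)  # the normalized input is left untouched
    enriched["mid_price"] = (bid + ask) / 2
    enriched["spread"] = ask - bid
    enriched.update(REFERENCE.get(quote["symbol"], {}))
    return enriched


q = {"symbol": "AAPL", "bid_price": Decimal("189.25"), "ask_price": Decimal("189.30")}
e = enrich(q)
print(e["mid_price"], e["spread"], e["listing_exchange"])  # 189.275 0.05 NASDAQ
```

Keeping enrichment in a separate function that only adds fields mirrors the point above: normalization defines the consistent base record, and enrichment layers context on top of it.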

Observing Normalization in Action

Traders may notice the effects of data normalization indirectly:

- Consistent display of bid-ask spreads across different trading platforms

- Alignment of timestamps when comparing trades from multiple exchanges

- Aggregation of quotes from multiple venues into a single view without confusion

- Smooth integration with algorithmic or analytics systems

Normalization allows traders to focus on market insights rather than data inconsistencies.
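Aggregating quotes from several venues, as described above, becomes a short computation once every quote shares one schema, one price type, and one size unit. The sketch below is a simplified illustration with hypothetical venue names; it is not a regulatory consolidated-quote calculation and ignores details such as quote eligibility and ties.

```python
from decimal import Decimal
from typing import NamedTuple


class VenueQuote(NamedTuple):
    venue: str
    bid: Decimal
    bid_size: int
    ask: Decimal
    ask_size: int


def best_bid_offer(quotes: list[VenueQuote]) -> tuple[VenueQuote, VenueQuote]:
    """Return (quote with the highest bid, quote with the lowest ask); quotes must be non-empty.

    The comparison is only meaningful because all quotes were normalized to the same schema and units.
    """
    best_bid = max(quotes, key=lambda q: q.bid)
    best_ask = min(quotes, key=lambda q: q.ask)
    return best_bid, best_ask


quotes = [
    VenueQuote("VENUE_A", Decimal("189.24"), 300, Decimal("189.30"), 200),
    VenueQuote("VENUE_B", Decimal("189.25"), 100, Decimal("189.31"), 500),
    VenueQuote("VENUE_C", Decimal("189.23"), 400, Decimal("189.29"), 100),
]
bb, ba = best_bid_offer(quotes)
print(bb.venue, bb.bid, ba.venue, ba.ask)  # VENUE_B 189.25 VENUE_C 189.29
```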

Common Challenges

Even with modern technology, normalization has operational challenges:

- High message volumes: Exchanges can generate thousands of updates per second, requiring fast processing

- Source differences: Different exchanges may introduce new fields, formats, or protocols

- Latency sensitivity: Normalization must be fast enough that it does not add meaningful delay to the data delivered to trading systems

- Error handling: Systems must flag or correct anomalies without dropping critical messages

Proper architecture, monitoring, and redundancy are essential to handle these challenges effectively.

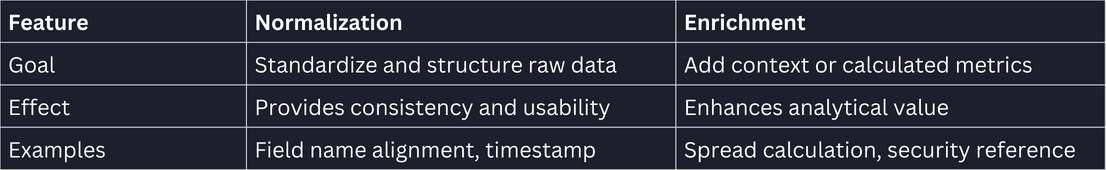

Normalization vs. Enrichment

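It’s important to distinguish normalization from enrichment. Normalization standardizes raw data into a consistent, validated format; enrichment adds context on top of that data, such as calculated metrics (mid-price, spread) and reference data (security identifiers, exchange codes).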

Understanding the difference helps traders and developers know which systems are responsible for which aspects of data handling.

Role in the Trading Ecosystem

Normalized data underpins many aspects of trading:

- Risk management: Accurate, consistent data is critical for position and exposure calculations

- Algorithmic trading: Automated strategies rely on standardized feeds to function correctly

- Analytics and monitoring: Dashboards and alerts are only effective if data is consistent and clean

- Compliance reporting: Regulators require accurate, timestamped, and complete trade and market data

Without data normalization, systems would be prone to errors, inconsistencies, and operational inefficiencies.

Summary

Data normalization is the process of transforming raw market data into a consistent, structured, and usable format. It is distinct from execution, access, or trading strategy — it ensures that market information from multiple sources can be interpreted, aggregated, and acted on reliably.

For traders and firms, normalization enhances clarity, reduces operational risk, and enables faster, more accurate analysis. By providing a consistent foundation for market data, normalization supports everything from analytics dashboards to automated trading systems, forming a critical layer in the modern trading ecosystem.

© 2026 Securities are offered by Lime Trading Corp., member FINRA, SIPC, NFA. Past performance is not necessarily indicative of future results.

All investing incurs risk including, but not limited to, the loss of principal. Additional information may be found on our Disclosures Page. The material in this communication is not a solicitation to provide services to customers in any jurisdiction in which Lime Trading is not approved to conduct business. This communication has been prepared for informational purposes only and is based upon information obtained from sources believed to be reliable and accurate; however, Lime Trading Corp. does not warrant its accuracy and assumes no responsibility for any errors or omissions. The information provided is not an offer to sell or a solicitation of an offer to buy any security or a recommendation to follow a specific trading strategy. Lime Trading Corp. does not provide investment advice. This material does not and is not intended to consider the particular financial conditions, investment objectives, or requirements of individual customers. Before acting on this material, you should consider whether it is suitable for your particular circumstances and, as necessary, seek professional advice.